Continuous advances in real-time and animation technology are ushering in a new era of hyper-realistic digital humans across games, film and TV.

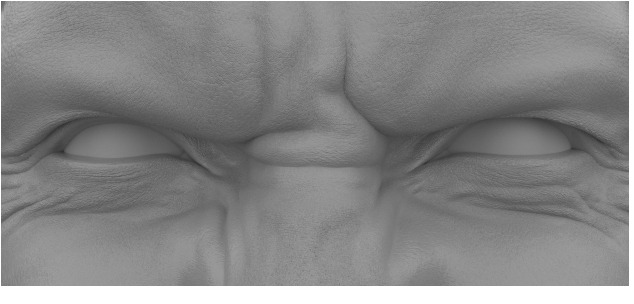

Facial expression is an intrinsic form of communication between human beings, as it is in the animal kingdom, and we are conditioned to recognize the slightest facial cues and microexpressions. Put simply, it’s the imperfections within an individual that make things seem ‘real’. This makes it incredibly difficult to cross the uncanny valley, which is characterised as the eerie or unsettling feeling that people experience in response to not-quite-human figures, using standard animation control-rig workflows.

With this traditional approach, reaching the fidelity that is now expected as standard is becoming increasingly time-consuming. In recent years, studios have introduced sophisticated new technologies to get past the uncanny valley, a step that is essential for creating high-quality, believable digital humans.

Now more than ever, studios must integrate new tools and technologies into their existing workflows in order to improve the quality of digital human projects. At Goodbye Kansas, we have seen huge demand for realistic digital human faces spanning TV, film and game projects. To keep our competitive edge, we have been adopting a range of new technologies to streamline and advance the production of digital humans. These include in-house 3D scanning methods, the Universal Scene Description (USD) framework, and close attention to the subtle, observable physical properties of the human face.

3D Scanning for High-Fidelity Digital Faces

Using our in-house 3D scanning facility, we capture high-fidelity data to develop facial rigs for our digital human projects.

One of our most recent projects to use this workflow is the cinematic trailer for Ubisoft’s triple-A game release, Skull and Bones. By making full use of our integrated workflows, we were able to create a highly faithful depiction of the protagonist’s face, including the details that evoke emotion, and our teams were able to push the boundaries of digital human production.

Integrating USD Workflows

Integrating the Universal Scene Description (USD) framework into our digital human projects is making it easier to collaborate and iterate on facial rigs. Our new facial tools support USD files as input, and a rig can be represented as a single asset, with individual blendshapes referenced as sublayers. With features such as 3D overviews in the USD viewer, easy switching between variants and versions, and the option to add parameters to specific blendshapes, the USD process shows great promise for handling facial rigs efficiently in the future.
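To make the idea of a single rig asset with blendshape sublayers more concrete, here is a minimal sketch in plain Python that writes the text of a root `.usda` layer. It is an illustration only, not our production tooling: the function name `rig_root_layer` and the blendshape file names are hypothetical, and a real pipeline would more likely use the USD Python API than string formatting.

```python
def rig_root_layer(blendshape_layers):
    """Return usda text for a root layer that lists each blendshape file as a sublayer.

    The file paths are hypothetical placeholders; asset paths in usda
    are written between @ signs.
    """
    entries = ",\n".join(f"        @{path}@" for path in blendshape_layers)
    return (
        "#usda 1.0\n"
        "(\n"
        "    subLayers = [\n"
        f"{entries}\n"
        "    ]\n"
        ")\n"
    )


# Hypothetical blendshape layer files for one facial rig.
text = rig_root_layer([
    "./blendshapes/jawOpen.usda",
    "./blendshapes/smileLeft.usda",
])
print(text)
```

In USD, sublayers listed earlier are stronger than those listed later, so the order of the list decides which layer wins when two of them author the same attribute.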

Making the Perfect Face

The key to creating a perfect digital human face is tapping into the intricate, minute details that are often overlooked. In digital human creation, one of the defining features is sticky lips. We look closely at the fine details of the mouth and how it behaves in motion. The subtle stickiness between the lips as they part is an important factor, and it can make all the difference to the perceived realism of a digital human.

Another useful consideration in the production of digital humans is dynamic skin microstructure. The human face is an incredibly complex structure, with expressions unique to each individual. Human skin is highly dynamic, and facial expressions deform its microstructure in different ways. At Goodbye Kansas, we have found that the most lifelike results come from dynamically filtering displacement maps based on the deformation of the underlying skin mesh. Our look development and lighting artists then use the resulting displacement and tension data to drive facial effects such as skin stretching and blood flow.
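As a rough illustration of deformation-driven filtering, the sketch below measures how much each vertex has stretched relative to the rest pose, then blurs a row of displacement samples where the skin is stretched and sharpens it where the skin is compressed. This is a simplified, assumed model and not the studio's implementation: the function names, the three-tap kernel, the 1D row of samples and the `strength` parameter are all hypothetical choices made for the example.

```python
import math


def edge_stretch(rest, deformed, edges):
    """Per-vertex stretch: mean deformed/rest edge-length ratio over incident edges."""
    totals = [0.0] * len(rest)
    counts = [0] * len(rest)
    for a, b in edges:
        ratio = math.dist(deformed[a], deformed[b]) / math.dist(rest[a], rest[b])
        for v in (a, b):
            totals[v] += ratio
            counts[v] += 1
    # Vertices with no edges are treated as undeformed (ratio 1.0).
    return [t / c if c else 1.0 for t, c in zip(totals, counts)]


def filter_displacement(samples, stretch, strength=1.0):
    """Blur a 1D row of displacement samples if stretch > 1, sharpen if stretch < 1."""
    n = len(samples)
    # amount > 0 moves each sample toward its blurred value (smoother skin);
    # amount < 0 moves it away from the blurred value (unsharp mask, rougher skin).
    amount = max(-1.0, min(1.0, strength * (stretch - 1.0)))
    out = []
    for i, d in enumerate(samples):
        left = samples[max(i - 1, 0)]
        right = samples[min(i + 1, n - 1)]
        blurred = (left + 2.0 * d + right) / 4.0
        out.append(d + amount * (blurred - d))
    return out


# Three vertices on a line, pulled to twice their rest spacing.
rest = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
deformed = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
stretch = edge_stretch(rest, deformed, [(0, 1), (1, 2)])

bump = [0.0, 1.0, 0.0]                                    # a single pore-scale bump
stretched = filter_displacement(bump, stretch[1])         # bump flattens
compressed = filter_displacement(bump, 0.5)               # bump gets more pronounced
```

In a production setting the same idea would run per texel on a 2D displacement map, with the filter direction following the local stretch direction; the stretch ratio itself can double as the tension signal that artists use for effects such as blood flow.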

With a population boom in digital humans, industry demand has grown across films, games and TV, and so have expectations of creating the perfect face. At Goodbye Kansas Studios, we have been combining our new suite of production-ready tools for digital humans with new technologies such as USD. Together, they give our production pipeline a strong toolbox that allows our artists to develop more detailed high-end characters than before.

Rasmus Haapaoja and Josefine Klintberg are software engineers at Goodbye Kansas Studios.

Goodbye Kansas Studios offers award-winning and uniquely integrated services for feature films, TV series, commercials, games and game trailers. Expertise includes VFX, FX, CG productions, 3D face & body scanning, digital humans, creature & character design, performance capture, animation and real-time techniques. The company, with a staff of 250+, is part of Goodbye Kansas Group AB (publ), which is listed on the Nasdaq First North Growth Market and has studios and offices in Stockholm, London, Helsinki, Vilnius, Belgrade, Beijing and Los Angeles.
