This year at SIGGRAPH there were a few standouts, not only for advances in technology but also for paradigm shifts in services, at least in the media & entertainment realm of computer graphics. I didn’t have time to attend many panels or talks, but I did get to moderate a panel for Autodesk on how visual effects production is changing as more and more people and companies have to contribute to the process.

Now, to the good stuff:

NVIDIA Quadro RTX Series. The new Turing architecture combines RT Cores for ray tracing and Tensor Cores for AI deep learning into something severely powerful. The ray tracing demos in Unreal Engine and beta versions of Arnold GPU were impressive, but it doesn’t stop there. The GPU accelerates computations for upscaling images, interpolating footage for slo-mo, and crunching massive data sets. Their example was analyzing wind data in a city to show what would happen if a new building were erected. Everyone is climbing aboard this bandwagon to make sure they support the new tech.

RenderMan Demo for Coco’s Underworld. Really, this was part of the entire RenderMan Science Fair presentation, which covered RenderMan’s latest version and how it’s being implemented in various host software. But the live presentation of Coco’s Underworld was the clincher. Using USD Hydra to feed the scene, we start out in the train station, moving the camera around and seeing near-immediate progressive rendering of the new view (in a non-textured mode, to be fair), assisted by the RTX tech. We move all through the scene, into a newsstand to look at the papers. Zoom out to see the entire station. Then move back out of the window to reveal that we are in the entire Underworld. Gajillions of polys. Working in close to real time, or at least reasonably so. A layout artist’s dream.

VICON Origin: Location-Based Virtual Reality. Put on six trackers (two on your feet, two on your hands, one on a backpack, one on the VR headset) and you are in a world that you can freely move around in (well, within the size of the motion-capture volume). Not only that, but you are in there with a couple of other people, all of you enjoying a shared experience: walking through catacombs, batting at a baseball game, crossing creaky bridges. You even have a torch that you hand back and forth to one another. Probably the most compelling VR experience I’ve had the pleasure to have.

HP Mars Home Planet: The VR Experience. I was invited to go to Mars. Through a VR headset and a motion-based pod, I got to travel around what a Mars installation might look like. While immersive, the experience was more like the Haunted Mansion at Disneyland. I really wanted to get up and explore. Kudos to the team who put it together, and who took input from. You can check out a sample here

Allegorithmic Project Alchemist. Not quite ready for primetime, but it looks promising. It is dedicated to Augmented Material Creation, which is a blend of Procedural/Capture/Artistry/AI. In contrast to Designer, which develops procedural Substances, and Painter, which applies textures and Substances to models, Alchemist is really a sandbox for playing with ideas interactively by browsing the Substances and modifying them with parameter sliders. Or maybe you want to change a look based on a color scheme you’ve seen: You can import the image and Alchemist will modify the Substance to match. Or you can create something from scratch, stacking up procedures on top of a base photograph to derive a brand new Substance. The TensorFlow AI is working underneath to enhance new tools like delighting, tiling, etc. There is a presentation as part of Substance Day here.

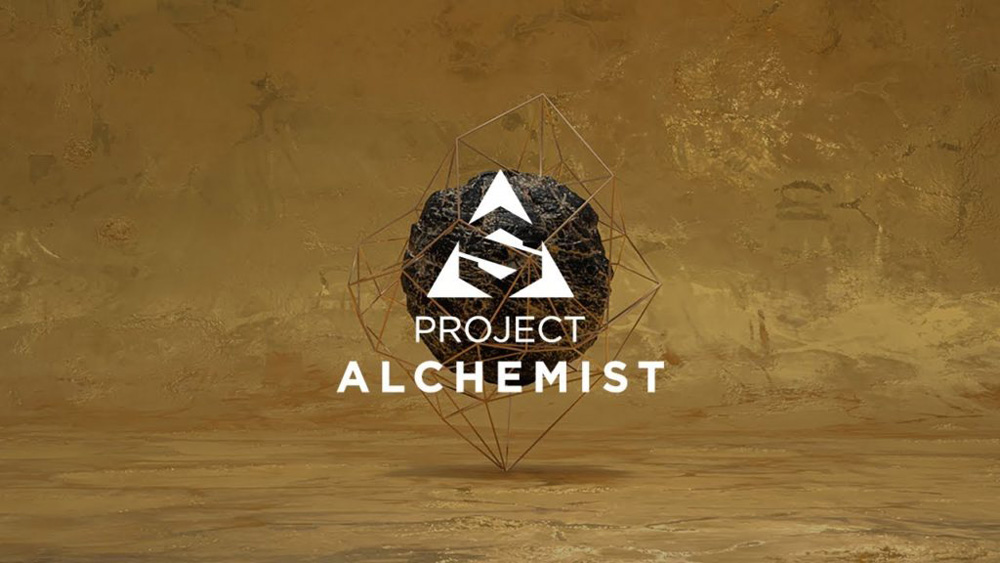

Using CG for Good. I met a fellow from Alberta, a lawyer by trade. He created a company called Veritas Litigation Support, which uses CG to visualize complex concepts in legal cases: things like showing the propagation of a gas leak through a valley to prevent a gas corporation from burying barrels in a particular area. Or perhaps showing the comparison of chemical and physical iterations of nanotech in a patent case. Using what we do in movies in a way that affects real people and real things really got me thinking … a lot.

Digital Domain’s Thanos Facial Animation System. Deep Learning is really the thing, if you haven’t noticed. Digital Domain has implemented it in production for Thanos in Infinity War, using DL to assist in analyzing Josh Brolin’s performance, learning what he should look like, and applying it to high-resolution meshes. If I were a betting man, I would put money on Digital Domain getting an Oscar for their work.

3Delight’s Hydra NSI and Cloud Rendering. Amid the cloud discussions, covering both VMs and rendering, 3Delight popped up with a presentation that not only incorporated Pixar’s Hydra API but also implemented it through the Nodal Scene Interface (NSI), along with Open Shading Language, to send scene descriptions to 3Delight, with the render then spun up in the cloud. This was demo’d through both Autodesk’s Maya and Foundry’s Katana, spinning up servers on Amazon.

As always, the Technical Papers Fast Forward is the place to go to see what will be implemented in the next couple of years. But actually going to the presentation is an assault on the brain. Too much science! You can see (most of) the presentations here.

Todd Sheridan Perry is a VFX supervisor and digital artist who has worked on many acclaimed features such as Black Panther, The Lord of the Rings: The Two Towers, Speed Racer and Avengers: Age of Ultron. You can reach him at todd@teaspoonvfx.com.
