
How courses at five higher education institutions are teaching students about new applications of artificial intelligence in their fields.

Every week now, we hear tales of how artificial intelligence will transform different aspects of society. “Disruptive” seems to be the description du jour. What does that mean for educators in the arts?

Teachers have an understandable aversion to using AI tools that were built on prior creative works without permission. Fortunately, toolmakers are emerging who assert that their tools are being trained on ethical sources. Although AI platforms of questionable origin continue to proliferate, enough reliable choices exist to reassure schools that some tools are ready for class time. And prominent colleges are responding by experimenting with them in varied and interesting ways.

![Hors Bonsai [CalArts]](https://www.beta.animationmagazine.net/wordpress/wp-content/uploads/Horse-Bonzai-01.jpg)

California Institute of the Arts

Douglas Goodwin is an interdisciplinary artist who has taught at CalArts in Santa Clarita for more than 18 years. This year, he’s teaching 16 master’s-level students in “AI for Experimental Animation,” which covers using AI for writing, previsualization, frame interpolation, motion capture and tracking.

It’s actually Goodwin’s second time teaching this course. His inaugural class had both graduate and undergraduate students, and it attracted a long waiting list for this year. “We went from a novelty that was sort of fun, and the students did lots of small creative experiments,” he recalls. “They said, ‘This is cool, but I don’t see a lot here for me yet.’ But this year, we’ve moved on to work that they did resonate with. It was a big change.”

Some students saw the tools’ potential to accelerate their workflows or to bring capabilities into their process that they didn’t have before. Goodwin still sees limits, though. “What I find most annoying is that these tools don’t isolate parts of an image or do simple green screen kinds of things. They’re designed to make finished images, and artists don’t want that.”

Goodwin is introducing his class to a variety of AI tools. “I definitely use Photoshop to show them the way Adobe is thinking about AI.” He points to Adobe’s beta tools for wire cleanup and notes that the company takes a very conservative position on their ethical use. Goodwin also has a subscription to Runway and uses it quite a bit. “Runway saw the wisdom of giving credits to my students,” he observes. At a time when subscription prices for AI tools can be daunting, that’s no small matter.

Goodwin is a strong proponent of open-source tools like ComfyUI, a node-based application for creating images, video and audio that was released on GitHub two years ago. “It’s all free,” he says. “We have AI that can define a character in an image so you can do a kind of automatic green screen. They can have the character removed from the background, and they can change that to a moonscape. If they don’t want the moonscape, but just the little green men, they can pull them out and resynthesize them to look like chocolate chip cookies. That’s all available.”

Also on his class menu is the image-to-image translation tool Pix2Pix, which lets students generate images from their Cintiq sketches. “They can work with a database of handbags or cats or flowers and get imagery projected onto unusual surfaces,” explains Goodwin. “It’s a great way to noodle because it’s fast, and they find weird associations that they wouldn’t have found otherwise.”

Goodwin is also optimistic about LoRA (Low-Rank Adaptation), a fine-tuning technique that platforms such as Cloudflare now support. “It’s a new way to make a model and tune it to capture your own style.” The popular press has made much of the errors in some AI outputs, such as images of six-fingered characters. Goodwin thinks that for art students developing new ideas, such glitches are a feature, not a bug.

As Goodwin describes it, using AI tools today is part fun-house mirror, part ‘pick your own adventure.’ “Innovation happens fast. It’s not pretty or easy to use. But this IS happening, and it’s moving towards the way artists want to use things. It’s a wild ride.”

Savannah College of Art and Design

Edward Eyth joined SCAD as Professor of Production Design in 2019, bringing with him a decade of experience as Creative Director of The Jim Henson Company. In his class, “Digital Rendering for Entertainment,” Eyth focuses on 2D digital sketching and painting skills, primarily in Photoshop. “It’s a prerequisite for using AI effectively,” he observes. “There has to be a foundation of understanding based on traditional design and arts education for students to be able to curate images in ways that make the best use of the AI process.”

After experimenting with AI for two years, Eyth concludes, “There has never been a time when AI produced an image that didn’t require editing and compositing for refinement.” He uses Midjourney for his in-class demos, explaining, “It’s been trained on the largest set of source data and often provides the most elaborate results.”

“The more dedicated design programs like Newarc, Vizcom, Hypersketch and Firefly are the ones I encourage students to experiment with. The AI lessons we focus on in class are meant to take an existing student design and render it in color, place it in an environment for context, and add recognizable elements for scale and proportions. Renderings that used to take hours or even days to generate can now be developed in just minutes using AI.”

Eyth explains, “For now, the lessons are intended to take the students’ creative work through the design process, and only then invite them to enter the AI space to explore options.”

In his own work, he notes, “I have found I spend less time generating concepts, and more time evaluating concepts. ‘Scrutinize and optimize’ has become a more relevant part of the workflow, at a much faster rate than ever before. Some AI programs will generate an idea, render it in a photorealistic style, and provide a rough 3D model (they get less rough with each app update) in a matter of minutes. A process that would take weeks or months can now be done in a single morning or day. That’s the part I find most exciting and why it is so important that we are introducing students to this new paradigm in the creative/design workflow.”

As his students prepare for tomorrow’s jobs, Eyth expects they’ll have to adapt quickly to the growing demand for AI fluency. “Career success used to be determined by ability; now it’s equally about agility.”

At heart, Eyth considers himself a ‘techno-optimist.’ He believes AI presents a once-in-a-lifetime opportunity to spur revolutionary thinking. “AI will work as an exoskeleton for our minds.”

New York University Tisch School of the Arts

Sang-Jin Bae and Ariana Taveras have designed a course called “Generative AI For Virtual Production” as part of NYU’s Master of Professional Studies program. This 14-week course, which began last fall at NYU’s Martin Scorsese Center for Virtual Production, has 22 students from around the world.

Taveras, who’s an Emmy Award-winning alum of Saturday Night Live, explains, “We’re not just teaching technology; we’re teaching storytelling. So, they’re learning the software coming from a more narrative background.”

Professor Bae, who’s been spearheading NYU’s Virtual Production curriculum since 2020, says, “We’ve been following AI tools like DALL-E, Midjourney and ChatGPT. When we started exploring the web-based software Runway, we found that it was a filmmaker’s tool. Before AI became a ‘thing,’ Runway had greenscreen keying tools that made compositing a lot faster and easier.”

Their goal is to give students hands-on experience in building immersive worlds, designing characters and creating compelling narratives. Their workshop approach includes structured exercises that build toward each student producing a 10-minute film.

“They only have 14 weeks to produce the script, the previz and a rough-cut edit,” explains Bae. “They have to create all the assets through Blender, Maya, Unreal and the Adobe suite. They’re all on-site in the computer lab.”

To provide hands-on guidance on integrating Runway’s AI tools into the process, Bae and Taveras brought in an expert, Adjunct Professor Leilani Todd. She’s part of Runway’s Creative Partner program, which is focused on building relationships with users in the production community. (Todd’s own AI imagery can be seen at www.floamworld.com.)

“The students had been experimenting on their own using Runway and Midjourney to iterate proofs of concept,” notes Bae. “We loved that gumption, but it was clunky.” (Student experimentation is possible because their NYU tuition covers the cost of licenses for tools like Runway. That is not yet a ‘given’ for educational licenses industry-wide.)

Notably, the well-known ChatGPT tool is especially popular among NYU’s foreign-born students because it helps them articulate their ideas for classes conducted in English. As Taveras notes, “Our students come from seven countries, including South Korea, China, Russia and Saudi Arabia. When they leave here, they will know the lingo and have a good knowledge basis.”

It remains an open question how AI fluency will affect students’ ability to land jobs after earning their master’s degrees. Will AI automate the kinds of repetitive tasks that often give new hires a gateway into the industry? Bae recalls that his own fluency in PowerPoint and HTML helped him find work after graduating from NYU. “Is AI the new calculator?” he wonders. “It is still hotly debatable how the tedious jobs in production will be handled by AI. We’ll have to see how the market corrects itself.”

Ringling College of Art and Design

Rick Dakan is at the forefront of bringing AI to the game development program at Ringling, where he has been teaching since 2016. In 2023, he first taught the course, “Writing with AI,” and then organized the AI Symposium held at Ringling last September. This year, Dakan is teaching “Topics in AI: AI For Game Development.”

This hands-on course is designed to help students build their knowledge of AI tools and apply them to their own original game ideas. Students create and test their own prompt libraries, develop multiple iterations of their games, conduct playtesting sessions and create promotional materials for their finished work.

The tools Dakan has chosen for this class include Claude Pro, which developer Anthropic is providing for free. Dakan notes that “Students also feel comfortable using Adobe’s Firefly tool because Adobe’s training data has a clear ethical source, and they have access to Adobe’s Creative Suite as part of their tuition.” Students can choose to incorporate additional AI tools, but they will have to cover those subscription costs themselves.

Dakan’s current class of 17 is a mix of students from different Ringling majors, including Computer Animation, Game Art, VR and Visual Studies. “It ranges from students who have some experience and want to ‘up’ their skills, to others who’ve never tried any of this,” he remarks. “There’s a difference between knowing what’s happening and what you can do with it. Some students have typed prompts into Midjourney, DALL-E or ChatGPT and gotten funky results. But even with a little bit of expertise, they’re surprised that they can go deeper.”

Dakan has developed a framework for AI fluency that focuses on prompt-crafting: being able to describe to the AI what you want to happen, and then to evaluate the outputs it gives you. “With AI, there will be weird stuff, which is easy to pick out. The insidious stuff is the mediocre stuff: the ‘good enough’ stuff. That’s where the death of creativity really lies.”

“I’m focused on them documenting their process,” Dakan continues. “AI can generate a bunch of stuff very quickly. But that’s just the first step. They have to respond to the prompts and adjust the outputs in order to develop a real awareness of the process. They need to learn what these tools are good at, and what they’re not good at.”

Dakan has learned that students entering the workforce are being asked about their AI skills in job interviews. To help prepare Ringling graduates for this new world, the college is launching an online certificate program called “AI For Creatives” this coming summer. His current course will be part of that program.

In these early days of the AI revolution, Dakan has noticed that few of his students arrive in class feeling ‘pro-AI.’

“But once they see places where it’s useful, they develop a more nuanced view. We’re ‘pro-Artist’ in the world of AI.”

School of Visual Arts

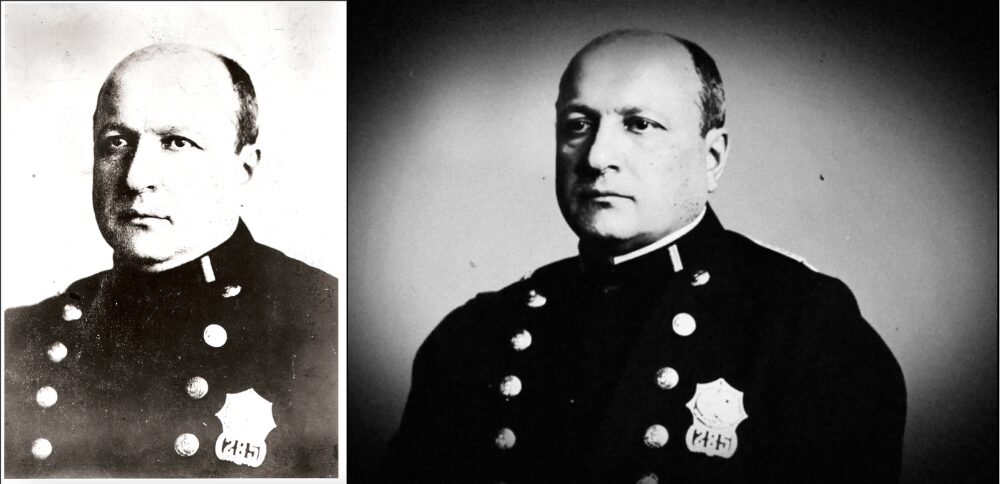

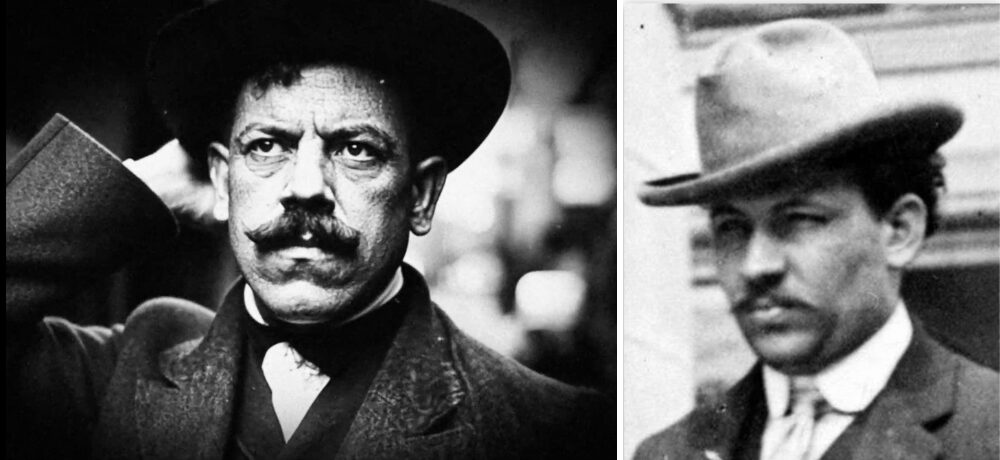

Anthony Giacchino arrived at New York’s SVA in the fall of ’24 to teach an AI-augmented class rooted in the genre you’d least expect: documentaries. The Emmy- and Oscar-winning filmmaker (Colette, Great Moments from the Campaign Trail) has worked on a variety of projects for Marvel, Disney, Warner Bros., Paramount and PBS. Teaching “AI and Filmmaking: A Critical Exploration” gave him the chance to use AI tools to re-create a New York City tale from the early 1900s. He thought that fusing historical re-creation with AI might offer a way to evoke a long-gone milieu.

“It was like fulfilling the dream of time travel: typing a year into a time machine and seeing images of what it was like back then,” Giacchino recalls. He began by taking his nine Master of Fine Arts students to explore places in Manhattan’s Little Italy, where the true story of Detective Joe Petrosino had played out.

Most of Giacchino’s students were foreign-born, from China, South Korea, Thailand and Poland, so making a film about newcomers to New York seemed apt. After photographing the actual sites where Petrosino had worked, the students faced the challenge of bringing their archive-heavy research to life through AI.

“We started with Midjourney, which allowed them to create imagery that was then brought into Runway and animated,” Giacchino explains. Then they used Photoshop and After Effects. The students made vintage shots of the Statue of Liberty come alive by doing things like animating ships sailing past it. Only four images in the 11-minute silent film could be considered ‘real’ — the rest were generated. “Seeing the generated footage as if it had been taken from a film from that time blew us away.”

Which is not to say that believable AI outputs were easy to achieve. Giacchino recalls, “It was hard to get the police uniforms of the time to look correct. Even with references, Petrosino kept coming out looking like a fascist dictator!”

As a way to create a ‘proof of concept’ for ‘documentaries’ like this, Giacchino thinks AI offers an interesting path. “We’re used to seeing recreations in documentaries. The difference now is that you can create something so convincing that you can potentially pass off something inaccurate as real. And that’s dangerous.”

But Giacchino is hardly an alarmist. He points out that the smartphone revolution put cameras in our pockets, but that didn’t turn us all into professional photographers. He thinks AI tools are at a similar point of development. His students may have forged a new path with this approach to ‘documenting’ history. How fellow documentarians may use it going forward is still unknown. But Giacchino plans to be back at SVA next year to teach the course again.

Ellen Wolff is an award-winning journalist who focuses on education, technology and animation.
